Summary of NAB'14 from the GPAC Licensing team

Dear followers,

We are back from NAB and wanted to share a few projects we found particularly interesting. We are part of some of them, and others simply amazed us.

GPAC demos

GPAC was present at several booths, including Elemental, Akamai, Level3, Red Cameras, 4EVER... and probably many others, although we couldn't get confirmation for all of them.

GPAC installation on Elemental Booth at NAB 2014

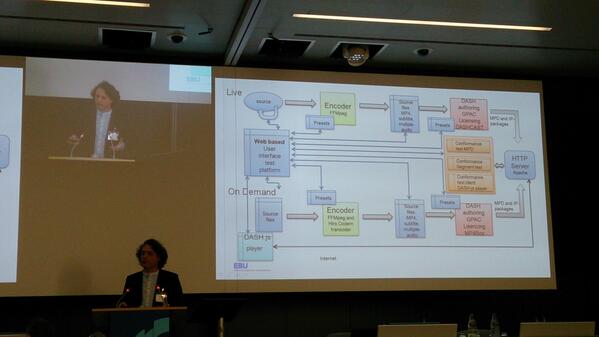

We also attended some talks about projects we are involved in. One I'd like to focus on is a partnership with EBU to build an open platform around standards:

Bram Tullemans from EBU presenting the EBU.io open platform. Photo from Nicolas Weil (https://twitter.com/NicolasWeil/status/449103723411668992)

The first private beta has just launched. More on this project in a few weeks :)

Of course, we met many people who used GPAC to prepare their demos and who use our player, including the people from the Fox labs, who had already used our player for their HPA Retreat demos (more here).

Other interesting trends

4K is not ready

While most of our playback demos were related to UHD playback, the focus was elsewhere. The Thomson booth was showing an HDR demo that everybody talked about...

The main issue is that the 4K ecosystem is not yet ready for broadcast. We were told there is not enough 4K content and there are not enough hardware-accelerated decoders. What's more, 4K HEVC encoders seem a bit too pricey at the moment. And pixel depths higher than 8 bits cause bandwidth issues with current graphics cards and connectors. If you are interested in these challenges, we advise you to read the Harmonic White Paper on Ultra HD.
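To give a rough idea of the bandwidth point, here is a minimal sketch of the uncompressed bitrate of a UHD stream at several bit depths. The resolution (3840x2160), the 60 fps frame rate, the chroma formats and the function name `raw_bitrate_gbps` are our own assumptions for illustration; they are not figures quoted at the show.

```python
def raw_bitrate_gbps(width, height, fps, bit_depth, samples_per_pixel=3.0):
    """Uncompressed video bitrate in Gbit/s (1 Gbit = 1e9 bits).

    samples_per_pixel is 3.0 for 4:4:4 and 1.5 for 4:2:0 chroma subsampling.
    """
    bits_per_second = width * height * fps * bit_depth * samples_per_pixel
    return bits_per_second / 1e9


# Assumed UHD resolution, for illustration only.
UHD = (3840, 2160)

for depth in (8, 10, 12):
    full = raw_bitrate_gbps(*UHD, fps=60, bit_depth=depth)
    sub = raw_bitrate_gbps(*UHD, fps=60, bit_depth=depth, samples_per_pixel=1.5)
    print(f"{depth:2d}-bit  4:4:4 {full:5.2f} Gbit/s   4:2:0 {sub:5.2f} Gbit/s")
```

Under these assumptions, 8-bit 4:4:4 comes to roughly 11.9 Gbit/s and 10-bit to roughly 14.9 Gbit/s, so every extra pair of bits adds about 3 Gbit/s of raw data to push through the card and the connector.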

On the other hand, staying in HD while increasing the frame rate and the color depth (and color space...) is praised by some players as a transition path. 1080p60 seems to be the quickest way to improve the user experience, and TVs apparently give good results when increasing the frame rate. One thing we don't get is why we need to use 10-bit, then 12-bit, then 16-bit pixels, since the computational complexity and the internal memory representation are 99% the same for a software player implementation. This is probably related to hardware support, but once more this will lead vendors to ship several incompatible implementations...
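The remark about memory representation can be illustrated with a small sketch. It assumes a software player that stores each sample unpacked in the smallest whole number of bytes, which is our simplification and not a description of any particular player; the helper names `bytes_per_sample` and `frame_bytes` are hypothetical.

```python
def bytes_per_sample(bit_depth):
    """Smallest whole number of bytes that can hold one unpacked sample."""
    return (bit_depth + 7) // 8


def frame_bytes(width, height, bit_depth, samples_per_pixel=1.5):
    """Size in bytes of one unpacked frame (1.5 samples per pixel = 4:2:0)."""
    samples = int(width * height * samples_per_pixel)
    return samples * bytes_per_sample(bit_depth)


# Assumed 1080p 4:2:0 frame, for illustration only.
for depth in (8, 10, 12, 16):
    size = frame_bytes(1920, 1080, depth)
    print(f"{depth:2d}-bit: {bytes_per_sample(depth)} byte(s)/sample, {size} bytes/frame")
```

With this layout, 10-bit, 12-bit and 16-bit samples all occupy two bytes, so the frame buffers have the same size and only the number of significant bits differs.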

Virtualization

There was a strong move toward virtualization.

This move is already a reality for many encoder manufacturers. Most of them provide all-software or cloud solutions. They have updated their offerings to follow the needs of OTT video.

Virtualization is the ability to separate the software from the hardware, if possible with the same capabilities (including content protection) and the same APIs. As a first step, for the broadcast industry, this would still run in the operators' or content providers' dedicated server rooms. But the door is clearly open for processing some data in "the cloud", i.e. any public infrastructure. The BBC moved its OTT iPlayer infrastructure with some issues, while NBC's Sochi Olympics were fully virtualized with success. This will obviously reduce costs, but is the infrastructure ready?

The real innovation is to see the biggest encoder manufacturers pushing this technology as if it were mainstream: broadcast as a service. It is as if they were pushing for the obsolescence of the old terrestrial and satellite networks.

I'd like to thank the OVF Squad members for their insights on the subject (for French speakers).